Dense layer with linear activation causes clone stream to stop working

Created by: ChiRuiChen

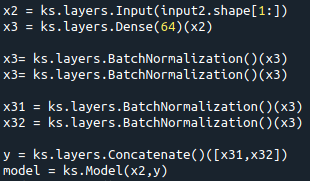

I tried a simple model that has a Dense layer followed by BatchNorm and multiple outputs. I know the model doesn't make sense; I just want to try out the clone stream function.
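For readers who cannot see the screenshots, a model of this shape can be sketched roughly as follows. This is only an illustration assuming the Keras functional API; the input size, layer widths, and layer names are made up and not taken from the screenshot.

```python
from tensorflow import keras
from tensorflow.keras import layers

# Hypothetical sizes and names, chosen only for illustration.
inputs = keras.Input(shape=(16,), name="in")

# Dense layer with linear activation (the case that triggers the problem).
x = layers.Dense(8, activation="linear", name="dense")(inputs)

# Two BatchNorm branches reading the same Dense output,
# so the Dense output has to be consumed twice.
out_a = layers.BatchNormalization(name="bn_a")(x)
out_b = layers.BatchNormalization(name="bn_b")(x)

model = keras.Model(inputs=inputs, outputs=[out_a, out_b], name="clone_stream_test")
```

Changing `activation="linear"` to `activation="relu"` in the Dense layer gives the variant that works for me.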

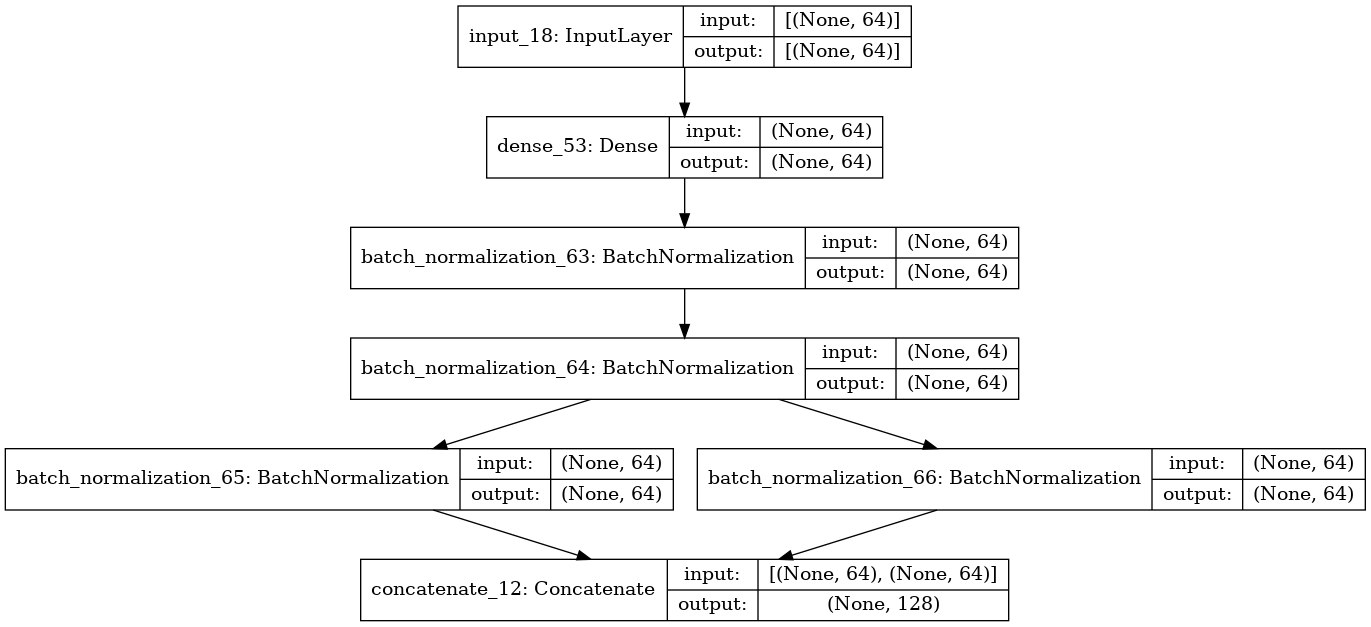

I then printed out the model's stream variables.

Normally it should create clone stream variables, but instead the two BatchNorm layers after the Dense layer are skipped.

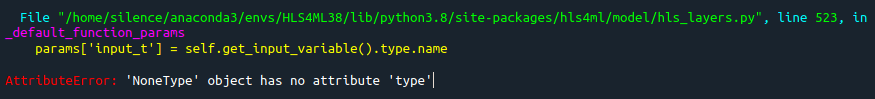

This results in the following error:

The problem goes away when I set the activation function of the Dense layer to ReLU.

Any suggestions?