Binary/ternary dense network

Created by: jngadiub

Add support for binary dense networks.

Tested with this model (equivalent to the default 3-layer model + batch normalization):

https://user-images.githubusercontent.com/5583089/40817230-f029045e-6516-11e8-8447-fe48c65dcf2c.png

https://github.com/hls-fpga-machine-learning/keras-training/blob/master/models/models.py#L105

Tested with <16,6> precision and these inputs:

0.63117, 0.676428, 0.0257138, 0.00082122, 0.0243723, 0.000579686, 0.336411, 0.163256, 0.336411, 0.610527, 0.593471, 0.534274, 0.706917, 0.566858, 0.0145026, 0.136126

The results are:

hls: -0.734375 -0.0185547 -0.549805 -0.886719 -1.17383

keras: -0.73436 -0.00826645 -0.547652 -0.889665 -1.18085

Latency and resources are compared below.
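As a quick sanity check on the HLS and Keras outputs quoted above, the sketch below computes the per-output deviation and checks that the HLS values sit on the grid implied by <16,6> (16 bits total, 6 integer bits, so 10 fractional bits and a step of 2^-10). It assumes NumPy is available; the variable names are illustrative and not part of this PR.

```python
import numpy as np

# Values copied from the PR description.
hls = np.array([-0.734375, -0.0185547, -0.549805, -0.886719, -1.17383])
keras = np.array([-0.73436, -0.00826645, -0.547652, -0.889665, -1.18085])

# <16,6>: 16 total bits, 6 integer bits (sign included) -> 10 fractional bits.
FRAC_BITS = 16 - 6
STEP = 2.0 ** -FRAC_BITS

# Per-output absolute deviation between the two implementations.
abs_diff = np.abs(hls - keras)
worst = int(np.argmax(abs_diff))

# The printed HLS values are rounded to about 6 significant digits, so allow
# a small tolerance when checking that they are multiples of STEP.
scaled = hls / STEP
on_grid = np.allclose(scaled, np.round(scaled), atol=1e-2)

print("step:", STEP)
print("abs diff:", abs_diff)
print("largest deviation at output index", worst, "=", abs_diff[worst])
print("HLS outputs on the 2^-10 grid:", on_grid)
```

With these numbers the largest deviation is on the second output (about 0.01, roughly ten grid steps); the other four outputs differ by less than 0.008.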

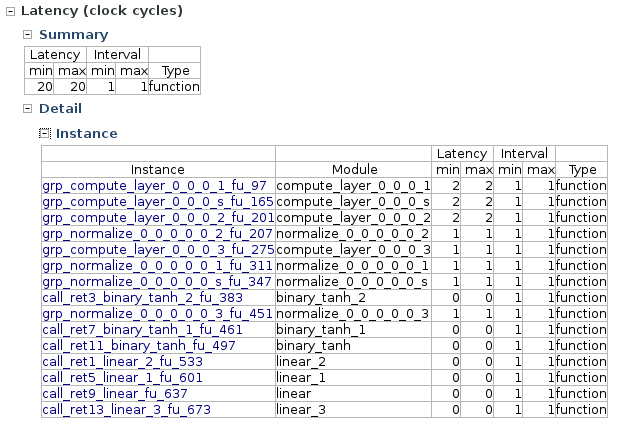

- Latency for binary dense model

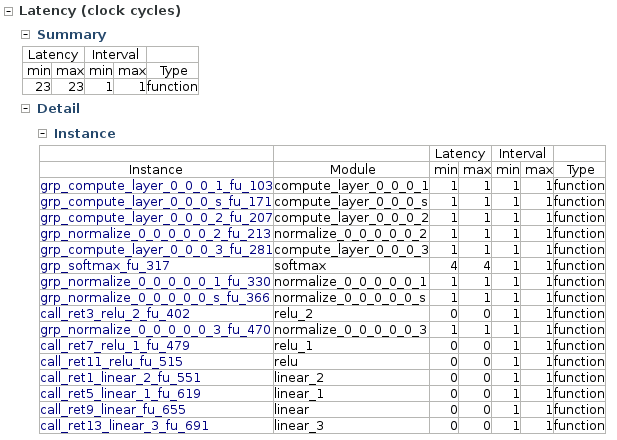

- Latency for dense model

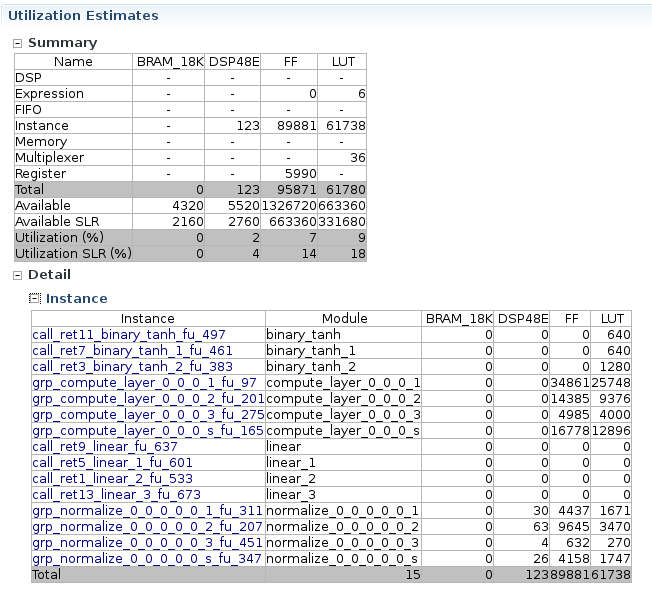

- Resources for binary dense model

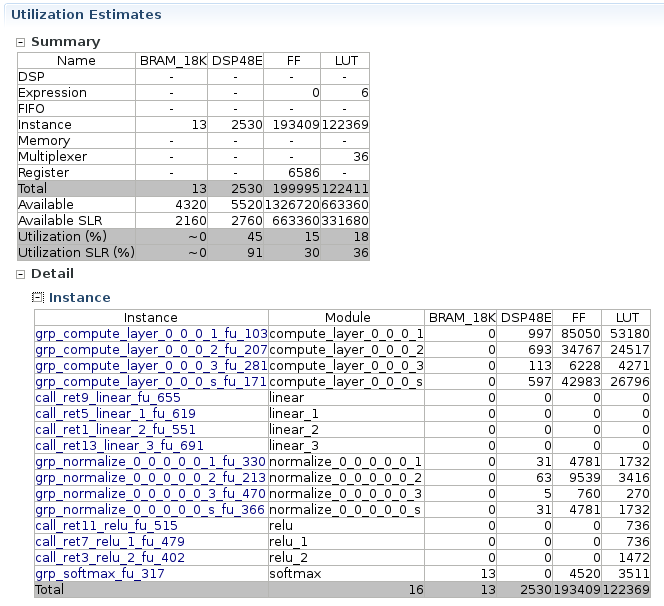

- Resources for dense model

The physics performance of this binary dense model is about 10% worse than that of the default dense model. Better performance can be obtained by increasing the number of parameters. I have a bigger binary dense model with 10x more parameters, but it takes a long time to synthesize and might crash at the end. Perhaps 2-5x more parameters is sufficient to recover the performance.

The ternary dense model should be straightforward to add as well. I'll update the PR with it next.
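For readers unfamiliar with the idea, here is a minimal NumPy sketch of what binary and ternary dense layers compute: weights are restricted to {-1, +1} (binary) or {-1, 0, +1} (ternary), so each product reduces to a sign flip or a skip. This is not the HLS implementation in this PR. The function names, the convention that a zero weight binarizes to +1, and the ternary threshold of 0.5 are all assumptions made for illustration.

```python
import numpy as np

def binarize(w):
    """Map real-valued weights to {-1, +1}; zero maps to +1 (assumed convention)."""
    return np.where(w >= 0, 1.0, -1.0)

def ternarize(w, threshold=0.5):
    """Map weights to {-1, 0, +1}; |w| <= threshold becomes 0 (assumed threshold)."""
    return np.where(w > threshold, 1.0, np.where(w < -threshold, -1.0, 0.0))

def quantized_dense(x, w, b, quantizer):
    """Dense layer y = x @ q(w) + b, where q quantizes the weights."""
    return x @ quantizer(w) + b

# Hypothetical 3-input, 2-output layer, chosen only to make the arithmetic easy.
x = np.array([0.5, -1.0, 2.0])
w = np.array([[0.9, -0.2],
              [-0.7, 0.1],
              [0.3, -0.8]])
b = np.zeros(2)

y_bin = quantized_dense(x, w, b, binarize)   # every weight contributes +x or -x
y_ter = quantized_dense(x, w, b, ternarize)  # small weights are dropped entirely
print(y_bin)
print(y_ter)
```

For this toy layer the binary version gives (3.5, -3.5) and the ternary version gives (1.5, -2.0). The ternary variant only adds a third weight state, which is why it should be a small extension of the binary case.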