Commits on Source (219)


- .gitlab-ci.yml: 10 additions, 10 deletions

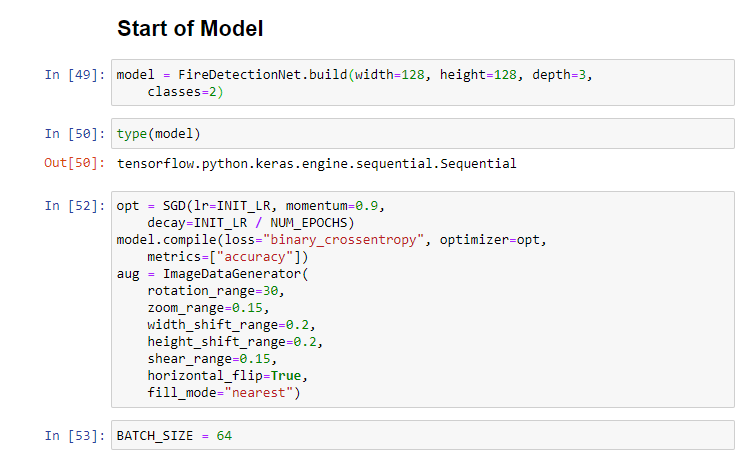

- ClassificationExample.ipynb: 2324 additions, 0 deletions

- Dockerfile: 92 additions, 10 deletions

- Evaluator.ipynb: 387 additions, 0 deletions

- ImageLoader.ipynb: 18329 additions, 0 deletions

- README.md: 176 additions, 0 deletions

- Test_Loader.ipynb: 4493 additions, 0 deletions

- dockerBasedci.yaml: 15 additions, 0 deletions

- expTest.ipynb: 2352 additions, 0 deletions

- extraction.sh: 4 additions, 0 deletions

- firedata1.npy: 0 additions, 0 deletions

- imageLoader.py: 70 additions, 0 deletions

- kerasDeloyment.yaml: 58 additions, 0 deletions

- nonfiredata1.npy: 0 additions, 0 deletions

- screenshots/THEOTHEREND.PNG: 0 additions, 0 deletions

- screenshots/eng.PNG: 0 additions, 0 deletions

- screenshots/execinto.PNG: 0 additions, 0 deletions

- screenshots/firstBatch.PNG: 0 additions, 0 deletions

- screenshots/hickups.PNG: 0 additions, 0 deletions

- screenshots/init.png: 0 additions, 0 deletions

ClassificationExample.ipynb

0 → 100644

This diff is collapsed.

Evaluator.ipynb

0 → 100644

This diff is collapsed.

ImageLoader.ipynb

0 → 100644

This diff is collapsed.

Test_Loader.ipynb

0 → 100644

This diff is collapsed.

dockerBasedci.yaml

0 → 100644

expTest.ipynb

0 → 100644

This diff is collapsed.

extraction.sh

0 → 100644

firedata1.npy

0 → 100644

File added

imageLoader.py

0 → 100644

kerasDeloyment.yaml

0 → 100644

nonfiredata1.npy

0 → 100644

File added

screenshots/THEOTHEREND.PNG

0 → 100755

47.7 KiB

screenshots/eng.PNG

0 → 100644

15.2 KiB

screenshots/execinto.PNG

0 → 100644

5.4 KiB

screenshots/firstBatch.PNG

0 → 100644

12.9 KiB

screenshots/hickups.PNG

0 → 100644

11.7 KiB

screenshots/init.png

0 → 100644

29.7 KiB